IoT EDGE Simplified using Azure

YouTube video part 1 : https://youtu.be/e6Qo0fABPrc

YouTube video part 2 : https://youtu.be/IJ06JRosTMo

YouTube video part 3 : https://youtu.be/91_QPyKt6JI

Azure EDGE-First Version (Azure IoT devices)

Microsoft Azure provides a combination of cloud services and a device SDK for building IoT projects: the SDK is used to develop the EDGE-side software, and the cloud side provides the ingestion and processing services. Using the first version of the SDK, we can design EDGE software that communicates with various sensors and real-time data sources. The EDGE software is a single application in which each communication method has to be programmed according to the nature of the sensors/sources.

If there is any issue in the EDGE software, the software itself or its library files need to be updated. In IoT projects it is not practical to monitor the field gateways physically on a regular basis, and any manual operation on a field gateway is costly and time-consuming. Because the EDGE software is a single application, we may need to update the entire software even for a minor issue.

So the EDGE software has to be updated remotely. Remotely means that the administrators who monitor the gateway should be able to control it: on a command, the gateway should download the software from a cloud/external repository and update itself, similar to an OS upgrade on a mobile phone.

Have you ever upgraded the OS of your cell phone? We go through some anxious minutes until the home screen and our data are back, right?

This whole process of downloading and upgrading the software on its own is risky, and it is a real challenge. Any issue while upgrading the gateway software may lead to complete data loss or a device software crash. If the device's EDGE software is not in a state to respond to commands from the cloud system, a manual operation is required to restore it.

Azure EDGE-V2 (Azure IoT Edge)

In the second version, Azure provides a better option, known as IoT Edge.

The EDGE software can be programmed and containerized, and it needs to be designed module-wise. That means that, rather than developing the EDGE software as a single program that communicates with all types of sensors or machines, we develop one module per type of sensor/data source: one module to communicate with one source of MODBUS registers (slave), another module to communicate with one type of serial temperature sensor. If we need to manage multiple MODBUS sensors, we run multiple instances of the MODBUS module in the gateway under different names.

Here I try to elaborate a simple use case that any reader can understand. I also try to cover how to prepare an end-to-end solution that can be controlled from the cloud, and to list the major challenges. Some portions of my code are also provided for technical readers.

Use Case Implementation

Smart plug

We are building a smart AC plug that can power electrical equipment such as a table fan. The smart plug can be controlled from anywhere over the internet and monitored through an interface.

Expectation

Each smart plug is considered a single device, and each device is connected to a gateway. The user can control and monitor each smart plug using interface software. The user also needs to know the health of the gateway-to-device connection.

The design

We can use an Azure IoT Edge module to manage the device from the gateway. A NodeMCU can be used as the controller that switches the AC supply to the smart plug. The gateway and the NodeMCU can be connected over WiFi.

The target to achieve

We need a solution to control electrical equipment, such as a table fan, remotely.

Expectations

The user interacts with the gateway through a user interface. Based on the device management commands from the cloud, the gateway should be able to control the device connected to it. The user should be able to know the health of the gateway-to-device connection.

The design / plan

We can use an Azure IoT Edge module to manage the device from the gateway. A NodeMCU is planned as the device. The gateway and the NodeMCU can be connected through a common WiFi router.

Module twin properties

Module twin desired properties are used to communicate the device management commands to the EDGE module; all device controls are passed through the desired properties. Module twin reported properties are used to communicate all device-level status to the cloud: the EDGE module updates the reported properties whenever the device status changes, and they can then be read from the cloud side.
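The split between desired and reported properties can be sketched roughly as follows. This is only an illustration in Python, not the module's actual C# code: the twin is modelled as plain dictionaries, SWITCH is the property named in this article, and the helper names (merge_desired, reported_patch) and the "linkStatus" key are hypothetical.

```python
# Illustrative sketch only: the twin is modelled as plain dictionaries.
# SWITCH comes from the article; "linkStatus" and the helper names are assumptions.

def merge_desired(current: dict, patch: dict) -> dict:
    """Apply a desired-property patch: None removes a key, anything else overwrites it."""
    merged = dict(current)
    for key, value in patch.items():
        if value is None:
            merged.pop(key, None)
        else:
            merged[key] = value
    return merged


def reported_patch(last_reported: dict, device_status: dict) -> dict:
    """Return only the keys whose value changed, so the twin is updated on change only."""
    return {k: v for k, v in device_status.items() if last_reported.get(k) != v}


# The cloud asks for SWITCH=1; the module stores it.
desired = merge_desired({"SWITCH": 0}, {"SWITCH": 1})

# The device status changed for SWITCH only, so only SWITCH is reported.
patch = reported_patch({"SWITCH": 0, "linkStatus": "up"},
                       {"SWITCH": 1, "linkStatus": "up"})
print(desired, patch)
```

Sending only the changed keys keeps the reported-property updates small; an empty patch means there is nothing to report.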

Heart-beat to know gateway-device link

The NodeMCU sends a heartbeat, with the device status in JSON format, to the gateway as an MQTT message on the “Out-topic” topic. The gateway keeps track of the last heartbeat time for each device: whenever a heartbeat is received, it updates the last-heartbeat-received time. If no heartbeat is received from a device within a specified time, the gateway updates the status in its reported property, and the cloud can then read it.
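The heartbeat tracking described above could look something like this. It is a minimal sketch in Python, not the module's real code: the class name HeartbeatMonitor, the device id "plug1" and the 6-second timeout are all assumptions chosen for illustration.

```python
import time


class HeartbeatMonitor:
    """Tracks the last heartbeat per device and reports whether the link is alive."""

    def __init__(self, timeout_s: float, clock=time.monotonic):
        self.timeout_s = timeout_s
        self._clock = clock      # injectable, so the logic can be exercised without sleeping
        self._last_seen = {}

    def on_heartbeat(self, device_id: str) -> None:
        # Called whenever an MQTT heartbeat arrives from the device.
        self._last_seen[device_id] = self._clock()

    def link_status(self, device_id: str) -> str:
        # "down" if no heartbeat was ever seen or the last one is older than the timeout.
        last = self._last_seen.get(device_id)
        if last is None or self._clock() - last > self.timeout_s:
            return "down"
        return "up"


# Simulated clock: the heartbeat arrives at t=0, then time moves on.
now = [0.0]
monitor = HeartbeatMonitor(timeout_s=6.0, clock=lambda: now[0])
monitor.on_heartbeat("plug1")
now[0] = 4.0
status_before = monitor.link_status("plug1")   # still within the timeout
now[0] = 11.0
status_after = monitor.link_status("plug1")    # heartbeat overdue
print(status_before, status_after)
```

The value returned by link_status is what the gateway would write into the reported property when it changes.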

Setting up Azure

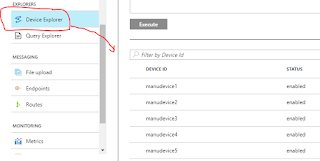

1. Create an IoT hub in the Azure portal under your subscription.

2. Create an IoT EDGE device with the name “device1”.

Setting up the gateway

1. Install the Ubuntu 16 OS on the gateway.

2. Install the IoT EDGE runtime on the gateway. (https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/iot-edge/how-to-install-iot-edge-linux.md)

3. Configure the /etc/iotedge/config.yaml file by adding the IoT device connection string from the Azure portal.

4. Install the Mosquitto broker on the gateway.

http://www.steves-internet-guide.com/install-mosquitto-linux/

Test the Mosquitto installation on the gateway with the commands below.

Open a terminal and type

mosquitto_sub -t "test"

Open another terminal and type

mosquitto_pub -t "test" -m "testing message"

If the installation is correct, you can see the message on the subscribing screen.

Setting up NodeMCU

We can program the NodeMCU using the Arduino editor. Use the link below to add the NodeMCU board to the Arduino editor.

https://www.teachmemicro.com/intro-nodemcu-arduino/

Develop NodeMCU program

- One output pin (D2) of the NodeMCU, along with its inbuilt LED, is used for this program.

- The NodeMCU publishes a heartbeat to the gateway as an MQTT message at an interval of 2 seconds.

- The heartbeat is a JSON message carrying the status of the inbuilt LED.

- The NodeMCU subscribes to the gateway on the topic “test1”.

- When the gateway receives a control command for the device (ON/OFF), it publishes the command to the topic that the NodeMCU subscribes to.

Eg: {SWITCH:0}

The NodeMCU receives this message from the topic and drives the signal on pin D2 “low”; the inbuilt LED also indicates the status of pin D2.

[The inbuilt LED glows if the signal is “low” and turns off if the signal is “high”.]
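The device-side behaviour can be modelled roughly as below. This is a Python simulation of the logic only, not the Arduino firmware itself. It assumes the command arrives as strictly valid JSON ({"SWITCH": 0} with quoted keys, unlike the shorthand above), that any non-zero SWITCH value drives the pin high, and that the heartbeat uses a field named "LED"; the function names are hypothetical too.

```python
import json

LOW, HIGH = 0, 1


def handle_command(payload: str) -> int:
    """Map a command such as {"SWITCH": 0} to the level to drive on pin D2."""
    command = json.loads(payload)
    return LOW if int(command["SWITCH"]) == 0 else HIGH


def led_is_lit(pin_level: int) -> bool:
    """The inbuilt LED is active-low: it glows when the signal is low."""
    return pin_level == LOW


def heartbeat_payload(pin_level: int) -> str:
    """Build the JSON heartbeat carrying the LED status (field name "LED" is an assumption)."""
    return json.dumps({"LED": 1 if led_is_lit(pin_level) else 0})


# A SWITCH:0 command drives D2 low, which lights the inbuilt LED.
level = handle_command('{"SWITCH": 0}')
print(level, led_is_lit(level), heartbeat_payload(level))
```

On the real board the same three steps would run inside the MQTT callback and the 2-second heartbeat timer.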

Controlling AC supply using NodeMCU

A relay circuit is used to control the AC supply. The relay is controlled by output pin D2 of the NodeMCU.

NodeMCU D2 pin ----------> IN pin of relay.

NodeMCU 3v3 pin ---------> VCC of relay.

NodeMCU GND pin --------> GND of relay.

The AC socket's supply has to pass through the relay; preferably, connect the phase (live) wire of the AC through it.

Develop IoT Edge Module – Hot eye

VS Code and Ubuntu 18 were used for this purpose.

The installation steps

https://docs.microsoft.com/en-us/azure/iot-edge/how-to-vs-code-develop-module

Below are the important methods implemented in this IoT EDGE module.

1. static Task OnDesiredPropertiesUpdate(TwinCollection desiredProperties, object userContext)

This is the method that listens for any desired property changes. Using this method, static variables are used to store the desired property values from the module twin.

2. await ioTHubModuleClient.UpdateReportedPropertiesAsync(reportedProperties);

This method is used to update the reported properties with the connection status.

3. Publish message to topic “outtopic”

The topic name is passed through the desired properties, and the commands to the NodeMCU are sent as messages to the “outtopic”.

4. Subscribe from topic “intopic”

The topic name is passed through the desired properties, and the NodeMCU sends its status as a heartbeat to this topic.

5. RunAsync: This method runs continuously, and the delay between two consecutive heartbeats is specified in it.
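How these pieces fit together can be sketched as follows. This is a simplified Python model, not the C# module: the class name PlugModule, the desired-property keys "outtopic" and "intopic", the topic "plug/cmd" and the injected publish function (standing in for an MQTT client) are all assumptions made for illustration.

```python
import json


class PlugModule:
    """Keeps desired properties and forwards SWITCH changes to the command topic."""

    def __init__(self, publish):
        self._publish = publish   # callable(topic, payload); stands in for an MQTT client
        self.desired = {"outtopic": "outtopic", "intopic": "intopic"}
        self.last_status = None

    def on_desired_properties_update(self, patch: dict) -> None:
        # Store the new values first, so a topic change in the same patch takes effect.
        self.desired.update(patch)
        if "SWITCH" in patch:
            payload = json.dumps({"SWITCH": patch["SWITCH"]})
            self._publish(self.desired["outtopic"], payload)

    def on_mqtt_message(self, topic: str, payload: str) -> None:
        # Only heartbeats arriving on the configured status topic are accepted.
        if topic == self.desired["intopic"]:
            self.last_status = json.loads(payload)


# Record published messages in a list instead of talking to a broker.
sent = []
module = PlugModule(publish=lambda topic, payload: sent.append((topic, payload)))
module.on_desired_properties_update({"outtopic": "plug/cmd", "SWITCH": 1})
module.on_mqtt_message("intopic", '{"LED": 1}')
print(sent, module.last_status)
```

Passing the publish function in keeps the twin-handling logic separate from the MQTT client, so it can be checked without a running broker.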

Develop an Interface to manage

An interface was developed to manage all operations on the device. This Windows application is written in C# using Windows Forms.

Below are the functionalities

1. Deploy a module.

2. Uninstall all the modules.

3. Set configurations.

4. Control button to “SWITCH-ON and SWITCH-OFF” smart plug.

5. Know the status of “edgeHub”, “edgeAgent”

6. Know the status of “Hot-eye” module.

7. Know the status of gateway to device link.

The user can click the “SWITCH” button, and the interface then updates the module twin desired property “SWITCH” to 1. Azure syncs this desired property to the device. On the device, the module gets a callback (OnDesiredPropertiesUpdate), and this method sends a message to the outtopic in JSON format: {SWITCH:1}

The major Azure APIs and methods used

1. Azure API to deploy a new module to the device

https://<>/devices/edgedevice100/applyConfigurationContent?api-version=2018-06-30

2. Azure API to update the module twin

https://IOT-Kochi3.azure-devices.net/twins/edgedevice100/modules/manumodule7?api-version=2018-06-30

3. RegistryManager.CreateFromConnectionString(connectionString);

4. registryManager.CreateQuery("SELECT * FROM devices.modules where deviceId=xxx", 100);

5. await query.GetNextAsTwinAsync();
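As a rough illustration of the module twin update call, the sketch below only builds the request and sends nothing. It is written in Python rather than the C# used by the interface, the function name build_twin_update is hypothetical, and the hub, device and module names are the ones from the URL above. Note that the REST API spells the query parameter api-version.

```python
import json
from urllib.parse import quote


def build_twin_update(hub_host: str, device_id: str, module_id: str,
                      desired: dict, api_version: str = "2018-06-30"):
    """Return (method, url, body) for a module twin desired-property update; nothing is sent."""
    url = (f"https://{hub_host}/twins/{quote(device_id, safe='')}"
           f"/modules/{quote(module_id, safe='')}?api-version={api_version}")
    # Only the desired section is patched; reported properties are written by the module.
    body = json.dumps({"properties": {"desired": desired}})
    return "PATCH", url, body


method, url, body = build_twin_update(
    "IOT-Kochi3.azure-devices.net", "edgedevice100", "manumodule7", {"SWITCH": 1})
print(method, url)
print(body)
```

A real call also needs an Authorization header carrying a SAS token for the IoT hub, which is left out of this sketch.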

Reference links

https://docs.microsoft.com/bs-latn-ba/azure/iot-edge/iot-edge-runtime

https://github.com/MicrosoftDocs/azure-docs/blob/master/articles/iot-edge/how-to-install-iot-edge-linux.md

http://www.steves-internet-guide.com/install-mosquitto-linux/

https://www.teachmemicro.com/intro-nodemcu-arduino/

https://docs.microsoft.com/en-us/azure/iot-edge/how-to-vs-code-develop-module

Important Linux commands required during development and deployment

sudo systemctl restart iotedge

sudo journalctl -f -u iotedge

sudo iotedge list

sudo iotedge check

sudo iotedge logs <> -f